Genome Editing and the ethics of risk. When is a risk morally acceptable or not?

By Dino Trescher, EUSJA

Science and technology are part of modern daily life and affect most human beings, in both the developed and the developing world. As the French philosopher and sociologist Bruno Latour argues: “Technology is society made durable”[1]. This is why the stakes in these fields are high.

The most recent ethical controversy involves the concept of life itself and the evolution of the biosciences and biotechnology: genome editing. The European debate on genetic modification (for example in agriculture), stem cell research and related fields is a well-documented example of public controversy and of the ‘ethicization’ of the public discourse on science, technology and innovation[2],[3],[4]. For many years molecular biologists have been looking for a technique to repair cellular processes and to modify the DNA of organisms through “guided genome editing”, that is, changing an organism’s genome by introducing a new function or correcting a mutation.

Then, in recent years, CRISPR/Cas9 technology appeared and has come to be regarded as the most powerful and easiest method for genome editing, owing to its high degree of fidelity, relatively simple construction and low cost. The CRISPR tool is attractive and can be used by any molecular biology lab; on the other hand, it can be used for any purpose, to modify the genome of plants, animals or humans. No regulation has been developed at the international level. This is why the application of genome editing techniques is of public interest and strongly influenced the agenda of a trilateral meeting of the German, French and British national ethics councils (NECs) in Berlin in October 2016.

Referring to the normative implications of genome editing in Germany, Reinhard Merkel, member of the German Ethics Council, emphasized that “we are at the brink of a new debate about readjusting the law of embryo protection, above all in regard to the new CRISPR technique and what it promises, or threatens”. The key question that arises is: should we alter human DNA in embryos using gene-editing technologies? He argued that “a fundamental constitutional right of the protection of born human beings is touched. Therefore biosciences and biotechnologies raise basic ethical problems, confronting scientists, legislators and ethicists with the famous Kantian question: what ought we to do?”

CRISPR/Cas9 is an example of the new and emerging sciences and technologies (NEST) that involve a “new paradigm” of the future: the convergence of the nano, bio, info and cogno sciences (NBIC). In these fields, as some researchers in science and technology studies argue, science has entered a so-called post-normal stage in which “facts are uncertain, values in dispute, stakes high and decisions urgent,” as Silvio Funtowicz and Jerome Ravetz put it in their article Science for the post-normal age. This is why, at the interface of new sciences and technologies, core issues for societies arise around different forms of knowledge and around the ethical concerns that need to be debated and decided upon.

The meaning of ethics assessment

At the very heart of the scientific endeavour is knowledge and its co-creation. This is where research and innovation projects, at least publicly funded ones, need to be in line with what has been defined as “responsible research and innovation” and as “science for and with society”, because handling uncertainties and risks in society requires all actors to cooperate. It is also where knowledge creation, which can later be applied in new technologies, is closely bound to ethics and ethics assessment.

Ethics and risk are two concepts that frame scientific knowledge and give rise to two different types of assessment, which overlap in some respects but differ in many others. Both are complex concepts that are used with a multiplicity of meanings or, as we prefer here, conceptions. Before we delve deeper into the relation between ethics and risk, it is useful to be more precise about both concepts and to explain what the key distinctions are and where ethics and risk meet.

The SATORI project describes ethics assessment as “any kind of assessment, evaluation, review, appraisal or valuation of practices, products and uses of research and innovation that makes use of primarily ethical principles or criteria” (the author is a member of the SATORI project). One particular institution where ethics assessment, or more precisely ethical guidance, plays a fundamental role for many actors in the biosciences and biotechnologies is the national ethics council (NEC). At a recent public plenary meeting of the German Ethics Council, “Ethics Advice and public responsibility”, Matthias Kettner, professor of applied ethics at Witten/Herdecke University in Germany, affirmed that three forms of knowledge are the fundamental ingredients of ethical discourse: factual knowledge, assessment knowledge and normative knowledge. All three need to be consistent with one another, without logical contradiction, to be useful in the development of ethical discourse. The German Ethics Council should therefore claim authority for morally relevant knowledge, and examine, improve or create it where necessary, only if it is capable of putting factual, assessment and normative knowledge into the context of a rational discourse that culminates in moral judgments. He added that this implies that some or all members of the NEC can be convinced of the correctness of these judgments, “not though but because they consist of criticisable reasons”*. The public dialogue of the 23 members of the German Ethics Council, which provides ethical advice and guidance mainly to the German Parliament, stands pars pro toto for the work of ethics assessors. It is a crucial task that comes with an obligation, because at the heart of NEST there are technologies that shape our collective future on our planet. And that is also where ethical issues arise.
For an overview and a detailed picture of the de facto ethics assessment landscape in the European Union and other countries, covering approaches, practices and institutions for ethics assessment across scientific fields, the different kinds of organisations that carry out assessment, and different countries, see the SATORI Main Report and its Annexes.

Problem solving capacities

The public discourse about NEST focuses on the question of how modern societies can arrive at reasonable decisions, norms, regulations and measures to deal with the ambiguities, uncertainties and risks of scientific and technological innovation, and with its main intended and unintended consequences. At the very core of these decisions are distinct moral and ethical values, norms and principles, which serve as a basis for assessing the issues we face in modern life. Solving ethical issues is therefore an important capacity of organizations, stakeholders, citizens and, last but not least, decision makers, who need to take responsible, collectively binding decisions.

Another distinction needs to be considered: what differentiates ethical questions from moral ones? A straightforward distinction between the moral and the ethical is provided by Tsjalling Swierstra, professor of philosophy at the University of Maastricht, and Arie Rip, professor of philosophy of science and technology at the University of Twente, in their analysis of patterns of moral argumentation in the domain of NEST. They claim that ethics is ‘hot’ morality and morality is ‘cold’ ethics. The reason for this distinction is that people become aware of moral routines only when others disobey them, or when conflicts between routines emerge and a moral dilemma arises; in short, when the routines are no longer able to provide satisfactory responses to new problems. Morals are therefore characterized “more by unproblematic acceptance”, whereas in contrast “ethics is marked by explicitness and controversy”. It is exactly at this point, when moral patterns are no longer sufficient to deal with NEST, that ethics assessment comes into play.

Many actors in science policy are aware of the inherent contingencies and ambivalences of research and innovation activities. This is why European research funding and regulatory bodies increasingly require risk and benefit analyses or evaluations. One of the latest developments is a report by the German Academy of Sciences Leopoldina and the German Research Foundation (DFG) on biosecurity and freedom of research, with recommendations for establishing ethics committees for safety-relevant research. Any risk/benefit assessment (see the SATORI report on risk-benefit analysis and the report on a methodology) is meant to help scientists and decision makers in ethics committees or ethics assessment units (EAUs) to allocate resources in the most effective way and to dedicate their efforts to those areas of concern on which it appears worthwhile to spend scarce resources. At its heart is, again, knowledge; more precisely, knowledge about probabilities.

Building knowledge from probabilities

One comprehensive overview of how knowledge about probabilities can be systematized has been developed by Andrew Stirling, professor of science and technology policy at the University of Sussex. It is meant to serve as a tool to catalyse nuanced deliberation among experts, who “must look beyond risk (see the top left quadrant of Figure 1) to ambiguity, uncertainty and ignorance using quantitative and qualitative methods”. He argues in a comment in Nature[5] that practical quantitative and qualitative methods already exist, but that political pressure and expert practice often prevent them from being used to their full potential. He adds that “a preoccupation with assessing risk means that policy-makers are denied exposure to dissenting interpretations and the possibility of downright surprise”. His core argument is that “choosing between [these] methods requires a more rigorous approach to assessing incomplete knowledge, avoiding the temptation to treat every problem as a risk nail, to be reduced by a probabilistic hammer. Instead, experts should pay more attention to neglected areas of uncertainty as well as to deeper challenges of ambiguity and ignorance”.

Figure 1: Uncertainty Matrix by Andrew Stirling. Source: Stirling, Andy 2010. Keep it complex. Nature 468, 7327, 1029–1031.

So with knowledge of probabilities at the core, the key question is: what can we know, and what can we not know, about the current and future implications of new and emerging technologies? Sheila Jasanoff, professor of science and technology studies at the Kennedy School of Government at Harvard University, Cambridge, Massachusetts, USA, pointed out in her article Technologies of humility: “From the abundant literature on technological disasters and failures, including studies of risk analysis and policy-relevant science, [we learn] that for almost every human enterprise that intends to alter society four key questions are crucial to address: What is the purpose? Who will be hurt? Who benefits [and how is it distributed]? How can we know?” This approach “distinctly favours the precautionary principle as a norm, because that principle takes scientific uncertainty and ignorance into account in setting policies,” Jasanoff explains in an email.

Assessing the existing knowledge and the associated probabilities leads to a crucial question at the point where key aspects of the ethics and risk discourses meet: how should we judge whether a risk is morally acceptable or not? Moral concerns in the ethics of risk that are often mentioned include voluntariness, the balance and distribution of benefits and risks (over different groups and over generations), and the availability of alternatives. One approach to coping with these numerous issues is proposed by Sven Ove Hansson, professor at the Royal Institute of Technology, Sweden, and author of the book “The Ethics of Risk”. He argues that the central ethical issue that a moral theory of risk has to deal with is the defeasance problem. It starts from the premise that everyone has a prima facie moral right, in short a right that holds at first sight, not to be exposed to the risk of negative impact, such as damage to one’s health or one’s property, through the actions of others. At the core of his argument is the question: “What are the conditions under which this right is defeated so that someone is allowed to expose other persons to risk?” His own preliminary solution refers to reciprocal exchanges of risks and benefits, mainly because each of us takes risks in order to obtain benefits for ourselves. His main claim is that we “can then regard exposure of a person to a risk as acceptable if it is part of a social system of risk-taking that works to her advantage and gives her a fair share of its advantages”. To cut a long ethical argument short, Hansson argues that “exposure of a person to a risk is acceptable if and only if this exposure is part of an equitable social system of risk-taking that works to her advantage”[6].

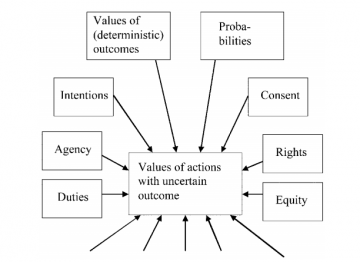

This means choosing to treat each risk-exposed person as a sovereign individual who has a right to fair treatment, and adopting another standard of proof: one has to give sufficient reasons for accepting that Ms Smith is exposed to the risk, rather than sufficient reasons for accepting the risk as such, as an impersonal entity. With the approach presented here we have, or at least so Hansson argues, a necessary prerequisite in place, namely the right agenda for the ethics of risk[7]. The ethical criteria for risk acceptance are illustrated by Hansson in Figure 2.

Figure 2: A more accurate view of what determines the values of indeterministic actions. Source: Hansson, Sven Ove 2012. A Panorama of the Philosophy of Risk. In S. Roeser et al (Eds.) Handbook of Risk Theory. Dordrecht: Springer Netherlands, 27–55.

Change in human agency beyond recognition

Alongside the potential benefits, risks, uncertainties and contingencies, and the necessity for precaution, there is another imperative that demands attention, mainly because all NEST have some implication for human agency, our capacity to anticipate intended and unintended forms of change. Apart from the implications of genome editing described at the beginning of this article, there is another crucial aspect, related to the information sciences and the information and communication technologies derived from them, which makes systematic ethics assessment an imperative task for humanity. Julian Kinderlerer, elected member and former president of the European Group on Ethics in Science and New Technologies (EGE, 2010-2016), shared his thoughts on the question “What induces change?” during a dinner conversation at the World Science Forum in Budapest in October 2015. In modern times, he answered, we are facing an acceleration of the modes in which science and technology, especially new information and communication technologies, shape our world. Change is of course induced in many ways. Technologies are built to induce change, but they have both intended and unintended consequences. As a result, modern societies are “challenged in their capacity to act”, he emphasised, and the “concept of agency is changing”. Most notably, he added, “the change in our current societies is beyond our recognition”. He pointed to two important aspects of this change. One is the “technology induced change perspective”: technologies advance without us having a say in what kind of change should happen. The second is “the change of human interaction perspective”, which “refers to the way technologies are shaping the human relationship between people”.
He asked: “Are we, firstly, willing and, secondly, capable of dealing with these fundamental changes?” We therefore have to “recognize humans and human relationships as something significantly different”. This means that risks are inextricably connected with interpersonal relationships. Kinderlerer concluded that “we should define the business plan of societies in the future with the guiding question: where will humanity be in 20 years from now”.

This is where and why ethics assessment can and should play a crucial role in today’s risk discourses and public controversies, which in fact mainly focus on systemic risks in areas such as climate, energy, health and nutrition, as well as financial and informational systems. All of these shape our future, probably even more than in the past. So the fundamental question that remains is how all stakeholders, that is, decision makers in science, politics, the economy and, last but not least, civil society, who act as architects of our collective future, can be more responsible and more transparent, and can decide and act accordingly in view of the risks and benefits, including the intended and unintended, positive and negative side effects, of new sciences and technologies.

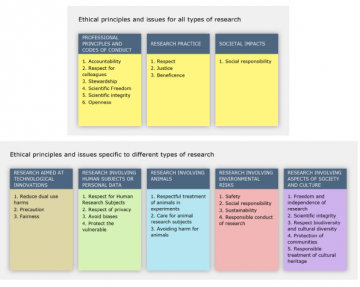

Against this backdrop, the SATORI project serves as an example of stakeholders acting together to co-create knowledge on ethics assessment in research and innovation projects. The researchers call for ethical reflexivity in their work, with ethics assessment as a key element, involving the identification and assessment of ethical issues in research and innovation. Ethics assessment practice faces many challenges: “it currently lacks unity, recognised approaches, professional standards and proper recognition in some sectors of society”. But at the same time, “different actors – including universities and research institutes, corporations and government organisations – are flagging the importance of ethics assessment and developing different initiatives and mechanisms to address ethical issues”. This is why they propose a framework and comprehensive approach to ethical principles, norms and values, procedures and practices, called Shared Ethical Principles and Issues for all Types of Research (see Figures 3 and 4).

Figure 3: Shared Ethical Principles and Issues for all Types of Research. Source: SATORI project

Figure 4: Ethical Principles and Issues for all Types of Research. Source: SATORI project, Philip Brey, presentation in Milan, p. 15/24.

[1] Latour, Bruno 1991. Technology is Society made durable. In John Law (Ed.) A sociology of monsters – Essays on power, technology and domination. https://de.scribd.com/document/155123130/John-Law-a-Sociology-of-Monsters-Essays-on-Power-Technology-and-Domination-1991.

[2] Bovenkerk, B. 2012. The biotechnology debate: democracy in the face of intractable disagreement. Dordrecht: Springer.

[3] Bogner, Alexander et al 2011. Die Ethisierung von Technikkonflikten. Studien zum Geltungswandel des Dissenses. Weilerswist: Velbrück.

[4] Bauer, Martin W, Durant, John & Gaskell, George 1998. Biotechnology in the public sphere: a European sourcebook. NMSI Trading Ltd.

[5] Stirling, Andy 2010. Keep it complex. Nature 468, 7327, 1029–1031.

[6] Hansson, Sven Ove 2012. A Panorama of the Philosophy of Risk. In S. Roeser et al (Eds.) Handbook of Risk Theory. Dordrecht: Springer Netherlands, 27–55.

[7] Hansson, Sven Ove 2003:305. Ethical Criteria of Risk Acceptance. Erkenntnis 59, 291-309.

* In the original German text: „nicht obwohl sondern weil sie auf kritisierbaren Gründen beruht“.